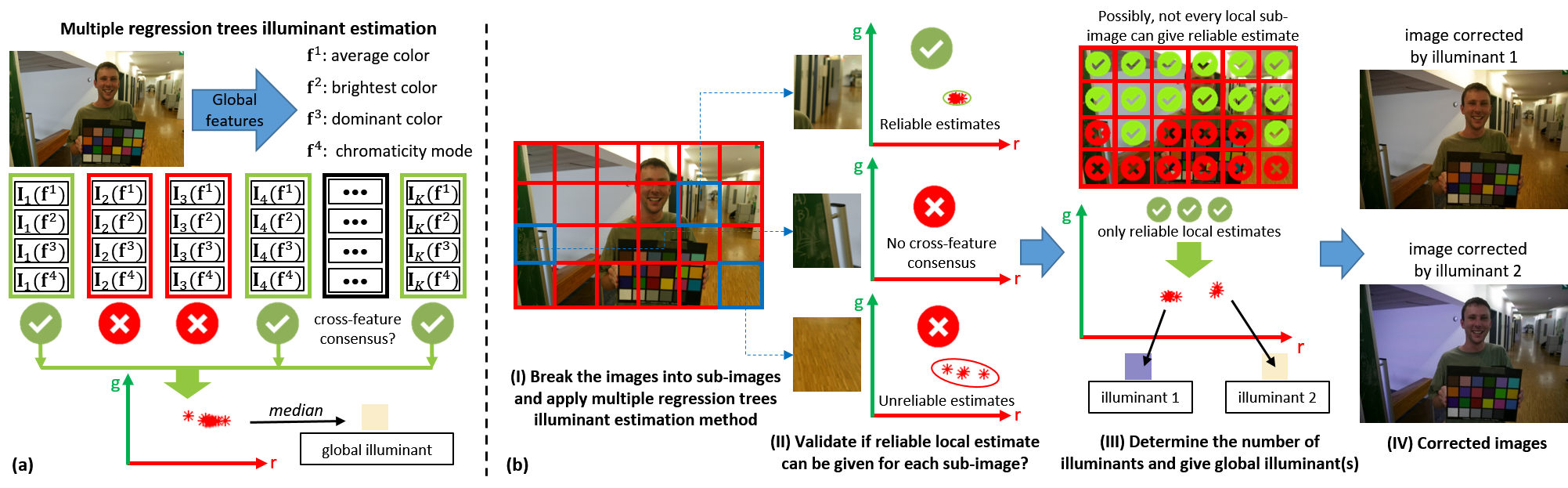

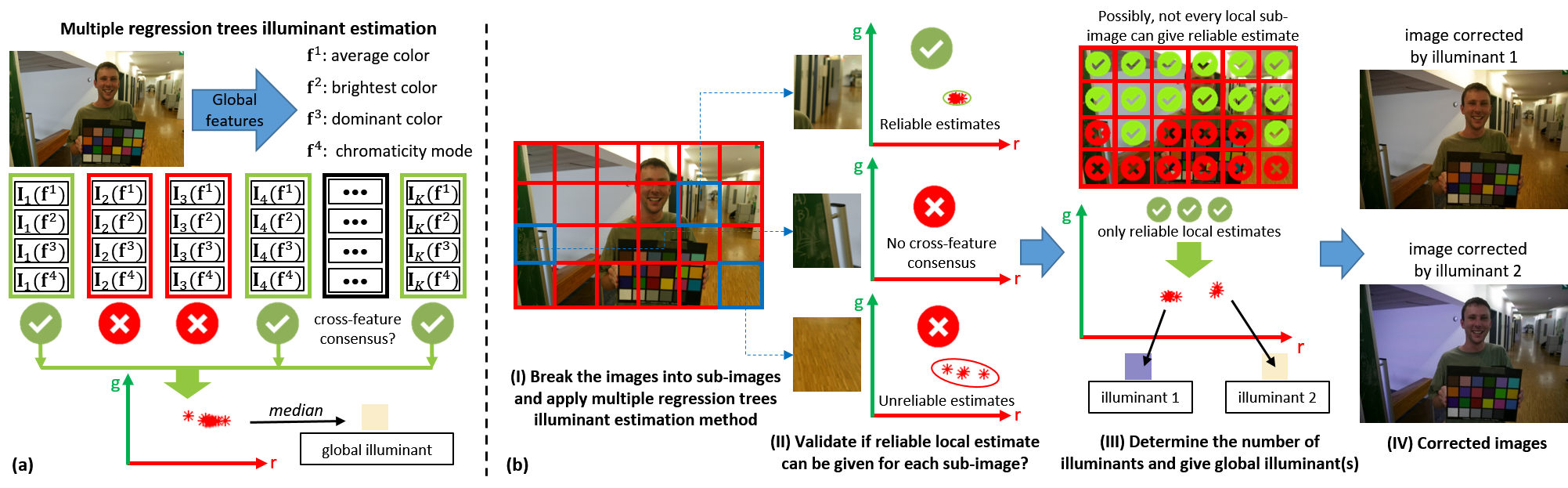

This paper examines the problem of white-balance correction when a scene contains two illuminations. Doing so is a two-step process: (1) estimate the two illuminants and (2) correct the image. Existing methods attempt to estimate a spatially varying illumination map; however, the results are error-prone and the resulting illumination maps are too low-resolution to be used for proper spatially varying white-balance correction. In addition, the spatially varying nature of these methods makes them computationally intensive. We show that this problem can be effectively addressed by not attempting to obtain a spatially varying illumination map, but instead by performing illumination estimation on large sub-regions of the image. Our approach is able to detect when distinct illuminations are present in the image and to accurately measure these illuminants. Since our proposed strategy is not suitable for spatially varying image correction, a user study is performed to see whether there is a preference for how the image should be corrected when two illuminants are present but only a global correction can be applied. The user study shows that when the illuminations are distinct, there is a preference for correcting the outdoor illumination, which gives a warmer final result. We use these collective findings to demonstrate an effective two-illuminant estimation scheme that produces corrected images that users prefer.
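The sub-region idea described above can be illustrated with a minimal sketch. This is not the authors' implementation: Grey-world stands in for the learning-based estimator used in the paper, and the 2-means grouping, the 5-degree threshold, and all function names (`region_estimates`, `detect_illuminants`, `angular_error_deg`) are assumptions made purely for illustration.

```python
import numpy as np

def grey_world(region):
    """Grey-world estimate: mean RGB of the region, scaled to unit length."""
    rgb = region.reshape(-1, 3).mean(axis=0)
    return rgb / np.linalg.norm(rgb)

def angular_error_deg(a, b):
    """Angle in degrees between two RGB illuminant vectors."""
    cos = np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))))

def region_estimates(image, rows=4, cols=6):
    """Split an H x W x 3 image into a rows x cols grid; estimate one illuminant per cell."""
    h, w, _ = image.shape
    ys = np.linspace(0, h, rows + 1, dtype=int)
    xs = np.linspace(0, w, cols + 1, dtype=int)
    return np.array([
        grey_world(image[ys[r]:ys[r + 1], xs[c]:xs[c + 1]])
        for r in range(rows) for c in range(cols)
    ])

def detect_illuminants(estimates, threshold_deg=5.0, iters=10):
    """Group unit-length estimates into two clusters (hypothetical 2-means step).

    Returns a (2, 3) array if the cluster centres differ by more than
    threshold_deg (an assumed value), otherwise a (1, 3) array holding
    the normalised mean of all estimates.
    """
    # Seed the two centres with the most dissimilar pair of estimates.
    cos = estimates @ estimates.T
    i, j = np.unravel_index(np.argmin(cos), cos.shape)
    centers = estimates[[i, j]]
    for _ in range(iters):
        labels = np.argmax(estimates @ centers.T, axis=1)
        if labels.min() == labels.max():  # one cluster is empty; stop refining
            break
        for k in (0, 1):
            mean = estimates[labels == k].mean(axis=0)
            centers[k] = mean / np.linalg.norm(mean)
    if angular_error_deg(centers[0], centers[1]) > threshold_deg:
        return centers
    mean = estimates.mean(axis=0)
    return (mean / np.linalg.norm(mean))[None, :]
```

Calling `detect_illuminants(region_estimates(image))` on a linear RGB image returns either one or two illuminant vectors; any per-region estimator could be substituted for `grey_world` without changing the rest of the sketch.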

| Method | Type | Total number of images | Detected | F-measure | Error for correct detections: illuminant 1 | Error for correct detections: illuminant 2 | Error for incorrect detections: min error | Error for incorrect detections: max error |
|---|---|---|---|---|---|---|---|---|
| Proposed (4 × 6) | Single | 502 | 477 | 0.9578 | 1.34 | - | 1.79 | 11.28 |
| Proposed (4 × 6) | Double | 66 | 49 | 0.7000 | 1.87 | 2.05 | 2.33 | 15.48 |
| Proposed (8 × 12) | Single | 502 | 464 | 0.9450 | 1.53 | - | 2.35 | 14.77 |
| Proposed (8 × 12) | Double | 66 | 50 | 0.6494 | 2.32 | 2.51 | 2.33 | 16.48 |
| Locally applied Grey-world | Single | 502 | 352 | 0.8000 | 4.60 | - | 4.31 | 14.27 |
| Locally applied Grey-world | Double | 66 | 40 | 0.3125 | 8.89 | 6.04 | 4.22 | 13.29 |
| Locally applied Corrected-Moment | Single | 502 | 393 | 0.8656 | 1.88 | - | 2.76 | 14.16 |
| Locally applied Corrected-Moment | Double | 66 | 53 | 0.4649 | 3.83 | 7.64 | 2.87 | 10.32 |
| Adapted Gijsenij et al. | Single | 502 | 256 | 0.6615 | 4.92 | - | 4.57 | 18.03 |
| Adapted Gijsenij et al. | Double | 66 | 50 | 0.2762 | 9.23 | 7.44 | 4.94 | 13.56 |
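The F-measure values in the table appear consistent with the standard definition (the harmonic mean of precision and recall), under the assumption that every image is labelled either single or double, so that a missed double-illuminant image counts as a false positive for the single class and vice versa. The page does not state this explicitly; the sketch below, with the hypothetical helper `f_measure`, simply shows that the assumption reproduces the reported numbers for the proposed 4 × 6 configuration.

```python
def f_measure(detected, total, other_detected, other_total):
    """F-measure for one class of a two-class (single/double) detection task.

    detected / total:             correct detections and image count for this class
    other_detected / other_total: the same counts for the other class
    """
    tp = detected                      # images of this class labelled correctly
    fn = total - detected              # images of this class that were missed
    fp = other_total - other_detected  # images of the other class mislabelled as this one
    return 2 * tp / (2 * tp + fp + fn)

# Proposed (4 x 6): 477 of 502 single and 49 of 66 double images detected.
print(f"{f_measure(477, 502, 49, 66):.4f}")  # 0.9578
print(f"{f_measure(49, 66, 477, 502):.4f}")  # 0.7000
```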

(a) User preferences for the indoor (L1) and outdoor illuminant (L5) corrections. (b) User preferences for each of the 5 illuminants, over the two categories (distinct and similar illuminants). Error bars represent the 95% confidence intervals.

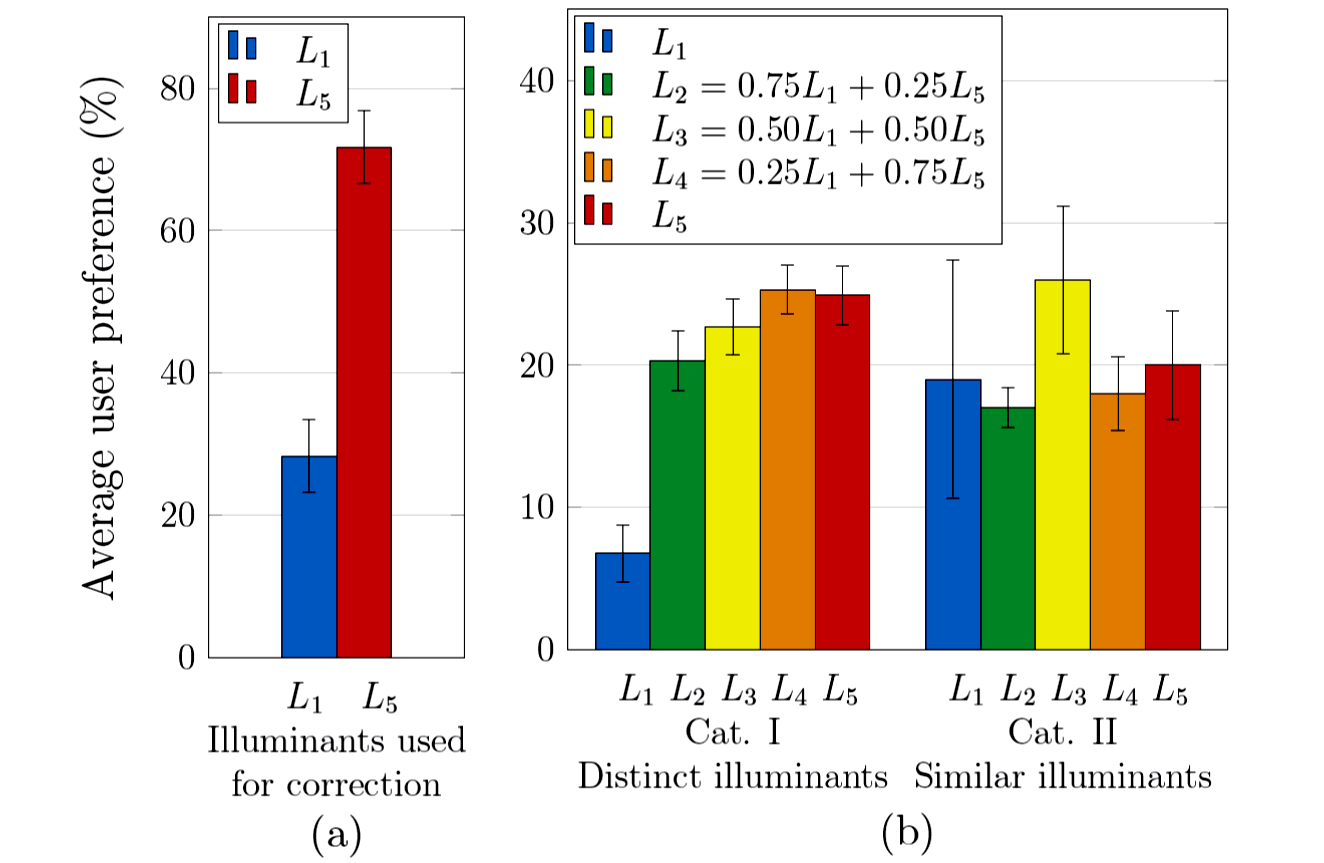

The top three images are from the Gehler-Shi data set, while the bottom three are from the RAISE data set. Images from the Gehler-Shi data set are compared against the Corrected-Moment result; images from the RAISE data set are compared against the weighted Grey-edge result.

Two Illuminant Estimation and User Correction Preference, CVPR, 2016. (PDF)

The proposed algorithm is straightforward to implement given the source code from the related work:

Effective Learning-Based Illuminant Estimation Using Simple Features

We prepared two data sets of two-illuminant images taken from existing data sets:

1. We identified 66 two-illuminant images in the Gehler-Shi data set. [Download]

2. We identified 34 two-illuminant images in the RAISE data set. [Download]

If you use the above data sets, please cite the corresponding publications:

1. P. V. Gehler, C. Rother, A. Blake, T. Minka, and T. Sharp. Bayesian color constancy revisited. In CVPR, 2008.

   L. Shi and B. Funt. Re-processed version of the Gehler color constancy dataset of 568 images. Accessed from here. Last accessed May 2016.

2. D.-T. Dang-Nguyen, C. Pasquini, V. Conotter, and G. Boato. Raise: A raw images dataset for digital image forensics. In ACM Multimedia Systems (MMSys), 2015.

This work was supported in part by an Adobe Research Gift Award.