What Else Can Fool Deep Learning?

Addressing Color Constancy Errors on Deep Neural Network Performance

Mahmoud Afifi¹ and Michael S. Brown¹,²

¹York University, ²Samsung Research

Abstract

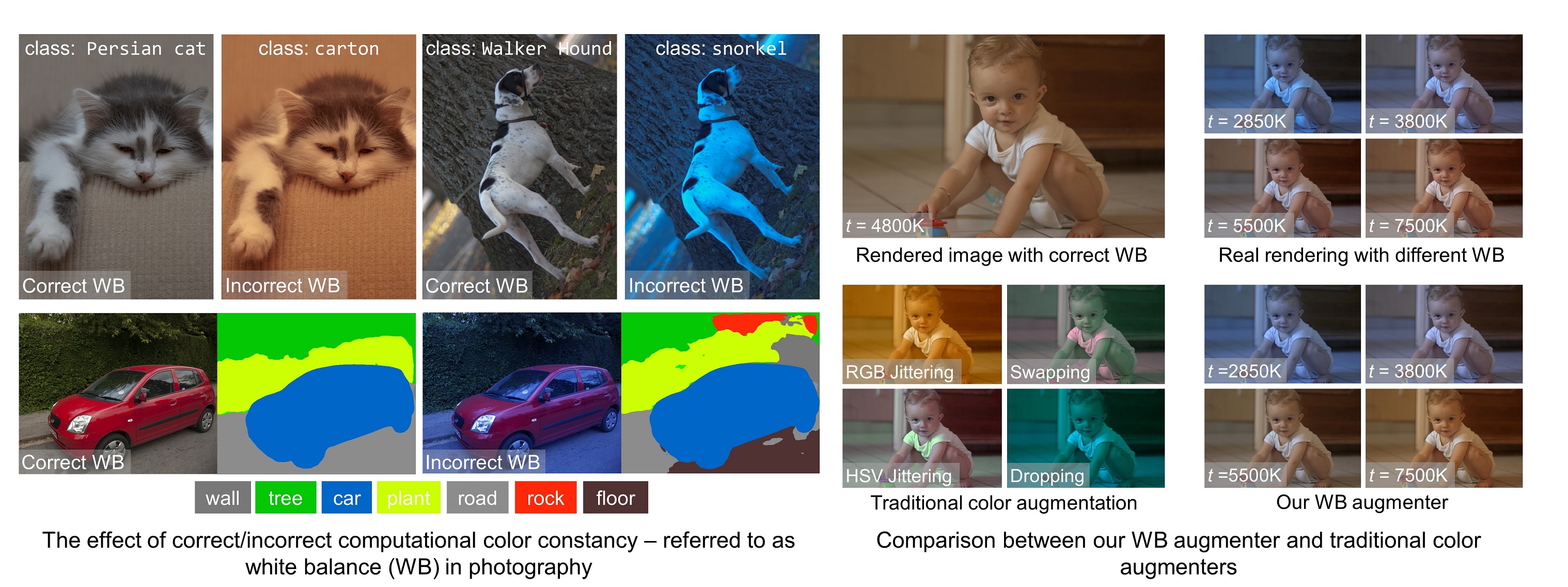

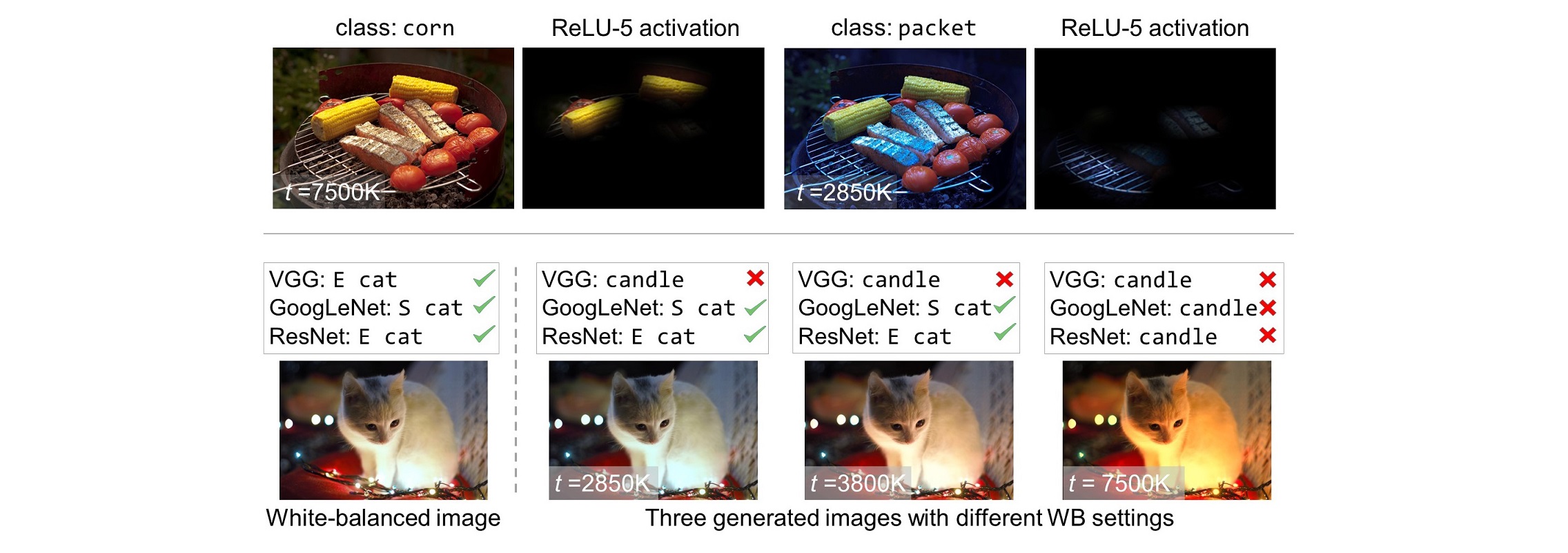

There is active research targeting local image manipulations that can fool deep neural networks (DNNs) into producing incorrect results. This paper examines a type of global image manipulation that can produce similar adverse effects. Specifically, we explore how strong color casts caused by incorrectly applied computational color constancy, referred to as white balance (WB) in photography, negatively impact the performance of DNNs targeting image segmentation and classification. In addition, we discuss how existing image augmentation methods used to improve the robustness of DNNs are not well suited for modeling WB errors. To address this problem, we propose a novel augmentation method that can accurately emulate realistic color constancy degradation. We also explore pre-processing training and testing images with a recent WB correction algorithm to reduce the effects of incorrectly white-balanced images. We evaluate both the augmentation and pre-processing strategies and demonstrate notable improvements on the CIFAR-10, CIFAR-100, and ADE20K datasets.

Effects of WB Errors on Pre-trained DNNs

We examine how errors related to computational color constancy can adversely affect DNNs focused on image classification and semantic segmentation. In addition, we show that image augmentation strategies used to expand the variation of training images are not well suited to mimicking the type of image degradation caused by color constancy errors.
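As a simplified illustration of why some standard augmentations cannot mimic a color cast, consider a global brightness jitter. The sketch below assumes pixels are linear RGB triples in [0, 1] and, purely for illustration, models a WB error as unequal per-channel (diagonal) gains; the function names and gain values are hypothetical and are not taken from the paper. Scaling all channels by one factor leaves a pixel's chromaticity unchanged (absent clipping), while unequal channel gains shift it, which is what a color cast looks like.

```python
# Toy illustration (assumption: pixels are linear RGB triples in [0, 1]).

def chromaticity(rgb):
    """Return (r, g) chromaticity: red and green divided by the channel sum."""
    total = sum(rgb)
    return (rgb[0] / total, rgb[1] / total)

def brightness_jitter(rgb, factor):
    """Typical photometric augmentation: scale all channels by one factor, then clip."""
    return tuple(min(1.0, c * factor) for c in rgb)

def wb_error(rgb, gains):
    """Hypothetical simplified WB error: scale each channel by its own gain, then clip."""
    return tuple(min(1.0, c * g) for c, g in zip(rgb, gains))

gray = (0.4, 0.4, 0.4)
print(chromaticity(gray))                             # neutral: r == g == 1/3 (up to rounding)
print(chromaticity(brightness_jitter(gray, 1.5)))     # still neutral: brighter, but no cast
print(chromaticity(wb_error(gray, (1.3, 1.0, 0.7))))  # r rises above 1/3: a reddish cast
```

The point is only that a single shared scale factor preserves color ratios, so a brightness-style augmentation alone cannot produce the channel imbalance of a WB error.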

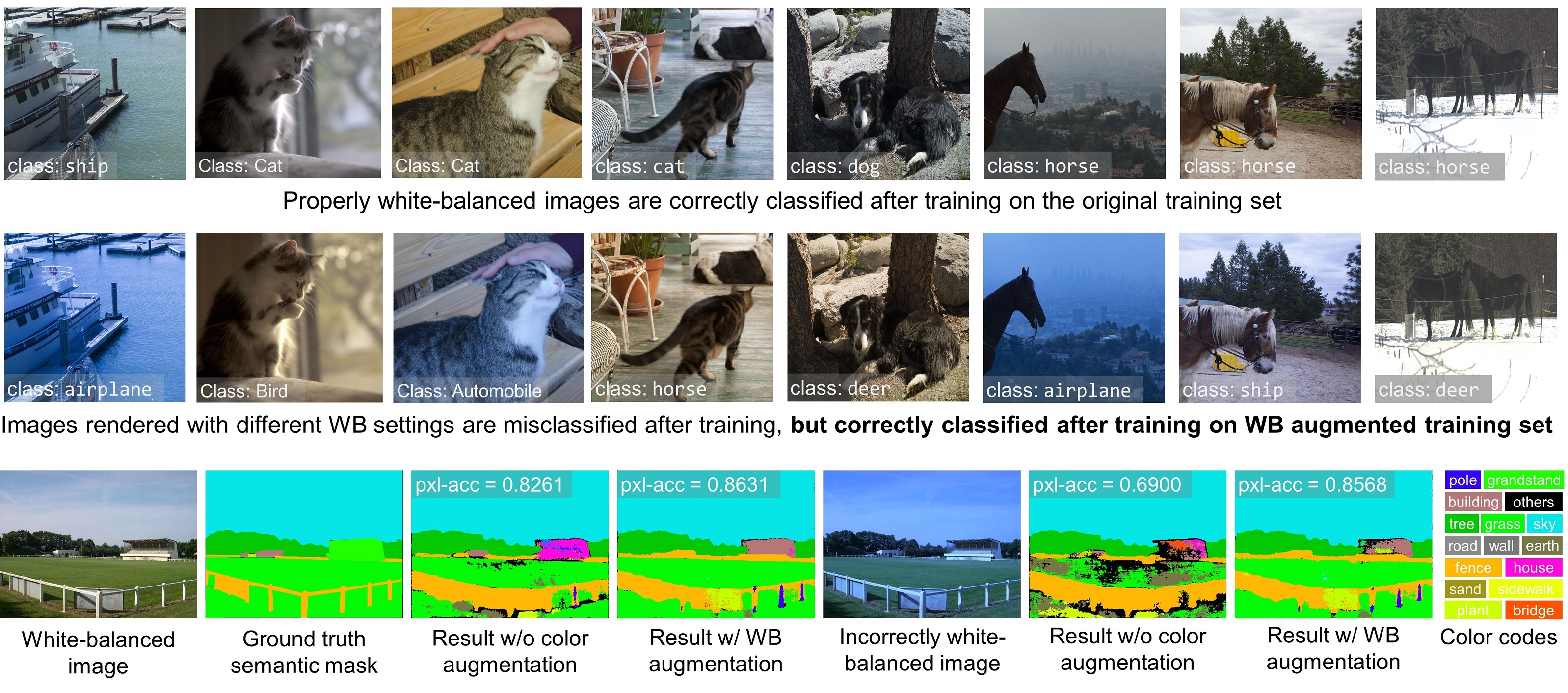

Training with WB Augmentation

We introduce a novel augmentation method that can accurately emulate realistic color constancy degradation.
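The sketch below shows only the general shape of a WB-error augmentation step, under a deliberately simplified assumption: a wrong illuminant is approximated by random per-channel gains applied to linearized sRGB values (a diagonal, von Kries-style model). This is not the paper's emulator; `augment_with_wb_error` and its `max_shift` gain range are hypothetical. Because camera pipelines apply WB before nonlinear photo-finishing, a diagonal change on rendered sRGB values is only a rough approximation of a real WB error. The transfer functions follow the standard sRGB definition (IEC 61966-2-1).

```python
import random

def srgb_to_linear(c):
    """Inverse sRGB transfer function for one value in [0, 1]."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

def linear_to_srgb(c):
    """Forward sRGB transfer function for one value in [0, 1]."""
    return 12.92 * c if c <= 0.0031308 else 1.055 * c ** (1 / 2.4) - 0.055

def augment_with_wb_error(image, rng, max_shift=0.4):
    """Apply one random color cast to an image given as a list of sRGB (r, g, b) tuples.

    Hypothetical simplified model: red and blue gains are drawn uniformly from
    [1 - max_shift, 1 + max_shift], green is fixed at 1.0, and the gains are
    applied to linearized values before re-encoding to sRGB.
    """
    gains = (rng.uniform(1 - max_shift, 1 + max_shift), 1.0,
             rng.uniform(1 - max_shift, 1 + max_shift))
    augmented = []
    for pixel in image:
        lin = [srgb_to_linear(c) * g for c, g in zip(pixel, gains)]
        # Clip to the valid range before re-encoding.
        augmented.append(tuple(linear_to_srgb(min(1.0, v)) for v in lin))
    return augmented, gains

rng = random.Random(0)  # explicit generator so the augmentation is reproducible
image = [(0.5, 0.5, 0.5), (0.8, 0.2, 0.1)]
augmented, gains = augment_with_wb_error(image, rng)
print(gains)
print(augmented)
```

The class label (or segmentation mask) would be kept unchanged for the augmented copy, since a color cast does not alter the scene content.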

We also examine a newly proposed WB correction method to pre-process training and testing images. Experiments on the CIFAR-10, CIFAR-100, and ADE20K datasets using the proposed augmentation and pre-processing correction demonstrate notable improvements on test images with color constancy errors.
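The paper pre-processes images with a recent WB correction algorithm. As a much simpler stand-in, the sketch below applies the classic gray-world assumption (the average scene color is achromatic), scaling each channel so its mean matches the green-channel mean. It assumes linear RGB pixel triples in [0, 1]; `gray_world_correct` is a hypothetical helper, not the correction algorithm used in the paper.

```python
def gray_world_correct(image):
    """Gray-world white balance on a list of linear RGB (r, g, b) tuples.

    Each channel is scaled so that its mean equals the green-channel mean;
    results are clipped to 1.0.
    """
    n = len(image)
    means = [sum(p[i] for p in image) / n for i in range(3)]
    gains = [means[1] / m if m > 0 else 1.0 for m in means]
    return [tuple(min(1.0, c * g) for c, g in zip(p, gains)) for p in image]

# A bluish-cast image: blue is too strong and red too weak in every pixel.
cast = [(0.2, 0.3, 0.45), (0.1, 0.15, 0.225), (0.3, 0.45, 0.6)]
corrected = gray_world_correct(cast)
print(corrected)
```

In the setup described above, the same correction would be applied to both training and testing images, so the network sees inputs with a consistent color balance.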

Files


Paper | Supplementary Materials | Poster | Code and Dataset

BibTeX

@inproceedings{Afifi2019WBEmulation,
  booktitle = {International Conference on Computer Vision (ICCV)},
  title = {What Else Can Fool Deep Learning? Addressing Color Constancy Errors on Deep Neural Network Performance},
  author = {Afifi, Mahmoud and Brown, Michael S.},
  year = {2019},
}